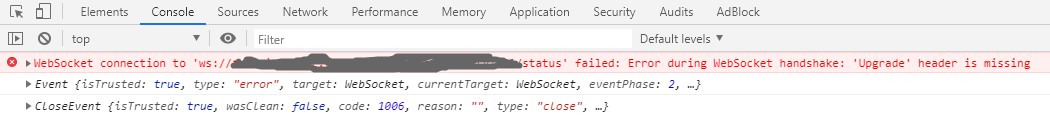

After upgrading Kong from 1.1.1 to 1.2.0, WebSocket connections that had been working could no longer be established. The frontend reported: 'Upgrade' header is missing

The server reported:

System.Net.WebSockets.WebSocketException (2): The remote party closed the WebSocket connection without completing the close handshake.

A quick search suggests this is a bug in Kong 1.2.0, tracked in the following issue and fix:

Websocket Upgrade header missing after upgrade to 1.2.0

fix(proxy) do not clear upgrade header (case-insensitive), fix #4779 #4780
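The PR title hints at the cause: a proxy is expected to strip hop-by-hop headers named in the `Connection` header, and a case-sensitive comparison against `upgrade` would also strip `Upgrade` when the client sends `Connection: Upgrade`. The sketch below only illustrates that failure mode. It is not Kong's code (Kong's runloop is written in Lua), and the function name `headers_to_clear` and its logic are assumptions made for this example.

```python
def headers_to_clear(connection_value, case_insensitive):
    """Return the header names listed in a Connection header value that a
    proxy would strip, keeping the standard connection tokens.

    Hypothetical helper for illustration only; not part of Kong.
    """
    keep = {"close", "keep-alive", "upgrade"}
    cleared = []
    for token in connection_value.split(","):
        name = token.strip()
        if not name:
            continue
        # The buggy variant compares the token as sent by the client.
        key = name.lower() if case_insensitive else name
        if key not in keep:
            cleared.append(name)
    return cleared


# Browsers commonly send "Connection: Upgrade" with a capital U.
print(headers_to_clear("Upgrade", case_insensitive=False))  # ['Upgrade']
print(headers_to_clear("Upgrade", case_insensitive=True))   # []
```

With the case-sensitive check, `Upgrade` is treated as an ordinary hop-by-hop header and removed, which matches the frontend's "'Upgrade' header is missing" symptom.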

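The fix is assigned to the 1.2.2 milestone, which has not been released yet. A quick and effective workaround is to take the patched handler.lua file from GitHub and create a ConfigMap from it: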

    apiVersion: v1
    data:
      handler.lua: |-
        -- Kong runloop
        --
        -- This consists of local_events that need to
        -- be ran at the very beginning and very end of the lua-nginx-module contexts.
        -- It mainly carries information related to a request from one context to the next one,
        -- through the `ngx.ctx` table.
        --
        -- In the `access_by_lua` phase, it is responsible for retrieving the route being proxied by
        -- a consumer. Then it is responsible for loading the plugins to execute on this request.
        local ck = require "resty.cookie"
        local meta = require "kong.meta"
        ...
    kind: ConfigMap
    metadata:
      name: kong-1.2.0-0-runloop-handler.lua
      namespace: kong

Then, in the Kong DaemonSet/Deployment, mount the ConfigMap at the path /usr/local/share/lua/5.1/kong/runloop/handler.lua:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      labels:
        app: kong
        name: kong
      name: kong
      namespace: kong
    spec:
      selector:
        matchLabels:
          name: kong
      template:
        metadata:
          labels:
            app: kong
            name: kong
        spec:
          containers:
          - env:
            - name: KONG_PLUGINS
              value: bundled,q1-api-auth,q1-user-auth,q1-user-check-permission
            - name: KONG_PROXY_ACCESS_LOG
              value: /dev/stdout
            - name: KONG_PROXY_ERROR_LOG
              value: /dev/stderr
            - name: KONG_ADMIN_LISTEN
              value: 0.0.0.0:8001, 0.0.0.0:8444 ssl
            - name: KONG_PROXY_LISTEN
              value: 0.0.0.0:8000, 0.0.0.0:8443 ssl
            - name: KONG_STREAM_LISTEN
              value: 0.0.0.0:9000 transparent
            - name: KONG_DATABASE
              value: postgres
            - name: KONG_PG_DATABASE
              value: kong
            - name: KONG_PG_USER
              value: kong
            - name: KONG_PG_PASSWORD
              value: PASSWORD
            - name: KONG_PG_HOST
              value: 192.168.130.246
            - name: KONG_PG_PORT
              value: "5432"
            image: kong:1.2.0-centos
            imagePullPolicy: IfNotPresent
            name: kong
            ports:
            - containerPort: 8000
              name: kong-proxy
              protocol: TCP
            - containerPort: 8443
              name: kong-proxy-ssl
              protocol: TCP
            - containerPort: 8001
              name: kong-admin
              protocol: TCP
            - containerPort: 8444
              name: kong-admin-ssl
              protocol: TCP
            - containerPort: 9000
              name: kong-stream
              protocol: TCP
            volumeMounts:
            - mountPath: /usr/local/share/lua/5.1/kong/utils
              name: kong-utils
            - mountPath: /usr/local/share/lua/5.1/kong/plugins/q1-api-auth
              name: q1-api-auth
            - mountPath: /usr/local/share/lua/5.1/kong/plugins/q1-user-auth
              name: q1-user-auth
            - mountPath: /usr/local/share/lua/5.1/kong/plugins/q1-user-check-permission
              name: q1-user-check-permission
            - mountPath: /usr/local/lib/luarocks/rocks/kong/1.2.0-0/kong-1.2.0-0.rockspec
              name: kong-rockspec
              subPath: kong-1.2.0-0.rockspec
            - mountPath: /usr/local/share/lua/5.1/kong/runloop/handler.lua
              name: runloop-handler-lua
              subPath: handler.lua
          dnsPolicy: ClusterFirst
          imagePullSecrets:
          - name: docker-secret
          nodeSelector:
            beta.kubernetes.io/os: linux
            kubernetes.io/role: node
          restartPolicy: Always
          volumes:
          - configMap:
              name: kong-utils
            name: kong-utils
          - configMap:
              name: q1-api-auth
            name: q1-api-auth
          - configMap:
              name: q1-user-auth
            name: q1-user-auth
          - configMap:
              name: q1-user-check-permission
            name: q1-user-check-permission
          - configMap:
              name: kong-1.2.0-0.rockspec
            name: kong-rockspec
          - configMap:
              name: kong-1.2.0-0-runloop-handler.lua
            name: runloop-handler-lua

With this in place, the problem was resolved.